
AI security has reached a critical inflection point: widespread adoption is colliding with inadequate protection capabilities. A survey of 100 cloud architects, engineers, and security leaders reveals that while 87% of organizations are actively using AI services, only 13% have implemented AI-specific security controls, leaving a dangerous gap between innovation speed and security maturity.


Current State of AI Security

The survey data paints a clear picture of rapid AI integration across cloud environments. Organizations are adopting AI services such as OpenAI, Amazon Bedrock, and other managed platforms at unprecedented rates, and AI is becoming embedded in daily operations rather than remaining experimental.

AI Adoption vs Security Expertise Gap

The most striking finding from the survey reveals a dangerous disconnect: 87% of organizations are actively using AI services, primarily through platforms like OpenAI, Amazon Bedrock, and Google Vertex AI. However, this widespread adoption hasn’t been matched by adequate security measures or expertise.

The speed of AI implementation across enterprises has been remarkable. Organizations are leveraging AI for various functions including customer service automation, content generation, data analysis, and decision-making processes. Yet this rapid deployment often occurs without proper security assessments or governance frameworks in place.

This gap between adoption and security readiness creates significant vulnerabilities that threat actors are increasingly targeting. AI-powered cyberattacks are becoming more sophisticated, and 58% of organizations report an increase in them. These attacks use AI to automate reconnaissance, create personalized phishing campaigns, and execute complex multi-stage attacks.

The Critical Skills Shortage

Perhaps the most concerning finding is that 31% of security leaders identify lack of AI security expertise as their primary challenge. This represents the most commonly cited obstacle across all surveyed organizations, highlighting a fundamental skills gap in the cybersecurity workforce.

Security teams are being asked to protect AI systems they may not fully understand. Traditional cybersecurity professionals often lack the specialized knowledge needed to secure machine learning models, data pipelines, and AI-specific attack vectors. This expertise gap creates growing risk surfaces as organizations deploy AI without adequate oversight.

The challenge is compounded by the rapid evolution of AI technologies. New AI frameworks, models, and deployment methods emerge frequently, making it difficult for security professionals to stay current with the latest threats and protective measures. Only 40% of organizations report having 100% endpoint security coverage, indicating broader visibility challenges in AI environments.

Traditional Security Tools Aren’t Enough

The survey reveals a troubling reliance on conventional security measures that weren’t designed for AI workloads. Only 13% of organizations have adopted AI-specific security posture management (AI-SPM) tools, while most continue using traditional approaches:

- 53% implement secure development practices

- 41% rely on tenant isolation

- 35% conduct regular audits to uncover shadow AI

- 70% still depend primarily on Endpoint Detection and Response (EDR)

While these foundational controls remain important, they cannot address AI-specific risks such as:

- Data poisoning attacks that corrupt training data

- Adversarial examples designed to fool AI systems

- Data extraction through model inference

- Prompt injection attacks on language models

- Model theft and intellectual property violations

The Shadow AI Challenge

Shadow AI is one of the most significant governance challenges facing organizations today. The term refers to employees using AI tools without IT oversight or approval. The survey findings indicate that 25% of organizations don’t even know which AI services are currently running in their environments.

Shadow AI emerges when employees seek to improve productivity by adopting AI tools independently, bypassing formal approval processes. While the intent is often positive, this creates serious risks:

Data Exposure Risks

Employees may inadvertently share sensitive information with public AI models when using unauthorized tools. Over one-third of employees admit to sharing sensitive work data with AI tools without employer permission. This can lead to:

- Confidential business information being exposed to third-party platforms

- Customer data being processed by unvetted AI services

- Intellectual property being inadvertently shared with competitors

Compliance Violations

Many industries have strict regulations regarding data usage and privacy. Unauthorized AI tool usage can violate GDPR, HIPAA, SOX, and other regulatory requirements, potentially resulting in:

- Significant financial penalties

- Legal liability exposure

- Reputational damage

- Loss of customer trust

Security Blind Spots

When AI tools operate outside IT oversight, security teams lose visibility into potential threats. This creates unmonitored attack surfaces where external threats can exploit unsecured AI interactions.
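
One practical starting point for regaining visibility is to look for AI service traffic in egress or proxy logs that already exist. The sketch below is illustrative only: the log record shape (`user` and `host` keys), the example domain list, and the `approved_domains` set are all assumptions made for this example, not the output format of any particular proxy or security product.

```python
from collections import Counter

# Example AI service hostnames to look for. This list is an assumption for
# illustration; a real deployment would maintain and update its own list.
AI_SERVICE_DOMAINS = {
    "api.openai.com",
    "bedrock-runtime.us-east-1.amazonaws.com",
    "aiplatform.googleapis.com",
}


def find_shadow_ai(log_records, approved_domains):
    """Count requests per (user, host) to AI services that are not approved.

    log_records: iterable of dicts with hypothetical keys "user" and "host".
    approved_domains: set of hostnames that IT has sanctioned.
    """
    hits = Counter()
    for record in log_records:
        host = record.get("host", "").lower()
        if host in AI_SERVICE_DOMAINS and host not in approved_domains:
            hits[(record.get("user", "unknown"), host)] += 1
    return hits
```

A report like this does not prove misuse. It only shows where unsanctioned AI usage may be happening, so that the conversation with those teams can start.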

Cloud Complexity Amplifies Risks

Modern organizations operate in increasingly complex cloud environments, with 45% using hybrid cloud architectures and 33% operating in multi-cloud environments. This complexity significantly amplifies AI security challenges.

The distributed nature of hybrid and multi-cloud deployments makes it difficult to maintain consistent security policies and monitoring across all AI workloads. Only about one-third of organizations are using cloud-native security platforms like Cloud Security Posture Management (CSPM) or Cloud-Native Application Protection Platforms (CNAPP).

Multi-Cloud Security Challenges

Organizations using multiple cloud providers face additional complexities:

- Inconsistent security controls across platforms

- Different compliance requirements per cloud provider

- Varied AI service capabilities and security models

- Complex identity and access management across clouds

- Difficulty in maintaining unified visibility and monitoring

Infrastructure Scale Issues

Non-human identities now outnumber human identities by an average of 50 to 1 in cloud environments. This massive scale of automated processes and service accounts creates extensive attack surfaces. 78% of organizations have at least one IAM role that hasn’t been used in more than 90 days, representing potential security risks from unused but privileged accounts.
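
The 90-day threshold mentioned above is straightforward to check once role usage data has been exported. The following is a minimal sketch that assumes each role is a dictionary with hypothetical `name` and `last_used` keys; how that data is collected from a given cloud provider is outside the scope of the example.

```python
from datetime import datetime, timedelta, timezone


def find_stale_roles(roles, now=None, max_idle_days=90):
    """Return the names of roles unused for more than max_idle_days.

    roles: iterable of dicts with hypothetical keys "name" and "last_used",
    where "last_used" is a timezone-aware datetime or None if never used.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_idle_days)
    stale = []
    for role in roles:
        last_used = role.get("last_used")
        # Roles that were never used are treated as stale as well.
        if last_used is None or last_used < cutoff:
            stale.append(role["name"])
    return stale
```

Treating never-used roles as stale is a design choice for this sketch; some teams may prefer to apply a grace period based on the role's creation date instead.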

Understanding AI-SPM (AI Security Posture Management)

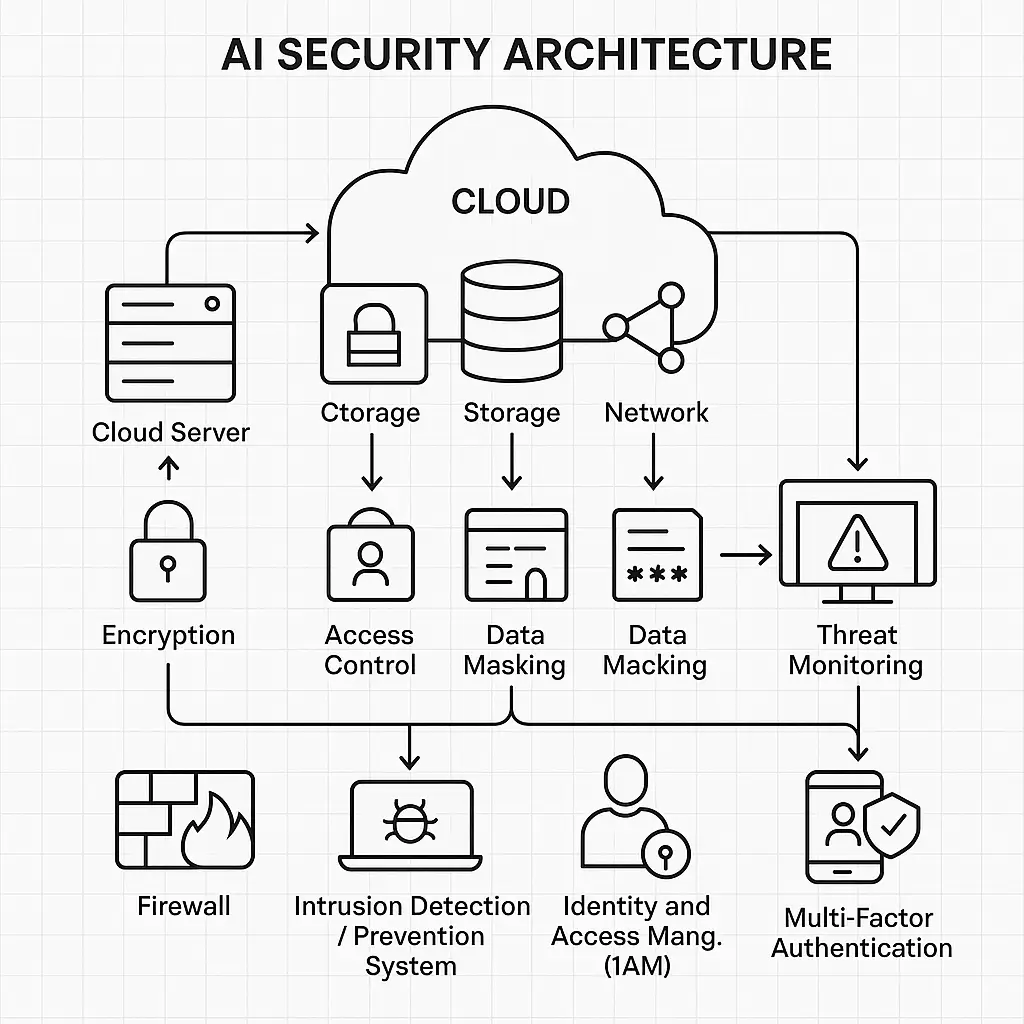

AI Security Posture Management (AI-SPM) represents a specialized approach to securing AI systems throughout their lifecycle. Unlike traditional security tools, AI-SPM provides comprehensive visibility and control specifically designed for AI workloads.

Core AI-SPM Capabilities

Discovery and Inventory: AI-SPM solutions scan cloud environments to identify all AI models, associated resources, data sources, and pipelines involved in training, fine-tuning, or deploying AI systems.

Risk Assessment: These tools analyze the complete AI supply chain, including source data, libraries, APIs, and deployment configurations to identify vulnerabilities and misconfigurations.

Continuous Monitoring: AI-SPM provides ongoing assessment of AI system security posture, detecting configuration drift, unauthorized access, and potential threats.

Policy Enforcement: Automated enforcement of security policies across AI development and deployment environments ensures consistent compliance with organizational standards.
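
To make the inventory and policy enforcement ideas more concrete, here is a small sketch of how discovered AI assets could be checked against a few rules. The `AIAsset` fields and the three rules are assumptions chosen for illustration; commercial AI-SPM products define their own data models and policies.

```python
from dataclasses import dataclass


@dataclass
class AIAsset:
    """A hypothetical inventory record for one discovered AI resource."""
    name: str
    kind: str                  # e.g. "model", "dataset", "endpoint"
    encrypted_at_rest: bool
    publicly_accessible: bool
    owner: str = ""


def check_posture(assets):
    """Return a list of (asset name, finding) tuples for rule violations."""
    findings = []
    for asset in assets:
        if not asset.encrypted_at_rest:
            findings.append((asset.name, "not encrypted at rest"))
        if asset.publicly_accessible:
            findings.append((asset.name, "publicly accessible"))
        if not asset.owner:
            findings.append((asset.name, "no owner assigned"))
    return findings
```

Running a check like this on a schedule, and comparing results between runs, is one simple way to approximate the continuous monitoring and drift detection described above.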

Why AI-SPM is Essential

Traditional security tools weren’t designed to address AI-specific risks. AI-SPM fills critical gaps by:

- Securing Model Lifecycles: Protecting AI models from development through deployment and ongoing operation

- Data Governance: Ensuring sensitive data used in AI training and inference is properly protected

- Threat Detection: Identifying AI-specific attacks like model poisoning, adversarial examples, and prompt injection

- Compliance Management: Automating compliance with AI-specific regulations and standards

Key Security Threats and Vulnerabilities

The AI threat landscape encompasses both traditional cybersecurity risks and new attack vectors unique to AI systems. Understanding these threats is crucial for developing effective defense strategies.

AI-Specific Attack Vectors

Data Poisoning: Attackers inject malicious data into training datasets to compromise model integrity. This can cause AI systems to make incorrect decisions or classifications, potentially leading to business disruption or security breaches.

Adversarial Attacks: These involve crafted inputs designed to fool AI models into making incorrect predictions. Adversarial examples can be subtle modifications that are imperceptible to humans but cause AI systems to fail dramatically.
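
The idea of a crafted input is easier to see on a toy model. The sketch below applies the fast gradient sign method, one well-known way to build adversarial examples, to a tiny logistic-regression classifier. The weights, input values, and step size are made up for illustration, and real attacks target far larger models where the perturbation can be much smaller.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, label, epsilon):
    """Perturb x by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    gradient = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Toy values, chosen only for illustration.
weights, bias = [2.0, -1.0], 0.0
x = [0.3, 0.2]                      # classified as class 1 by the model
x_adv = fgsm(weights, bias, x, label=1, epsilon=0.3)
```

With these toy values the original input is scored above 0.5 and the perturbed input falls below it, so the predicted class flips even though each feature moved by at most `epsilon`.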

Model Extraction: Attackers attempt to steal AI models by querying them repeatedly and reverse-engineering their behavior. This represents a significant intellectual property threat for organizations that have invested heavily in developing proprietary AI capabilities.

Prompt Injection: In language models and chatbots, attackers craft malicious prompts designed to bypass safety controls and extract sensitive information or cause unintended behaviors.
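
As a rough illustration of input screening for prompt injection, the sketch below flags a few obvious phrasings before a prompt reaches a model. The patterns are assumptions invented for this example, and pattern matching of this kind only catches crude attempts. It should be viewed as one layer among several, not as a defense on its own.

```python
import re

# Hypothetical patterns for illustration; real attacks are far more varied.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your|the) (system|hidden) prompt", re.I),
    re.compile(r"disregard (your|the) (rules|guidelines)", re.I),
]


def screen_prompt(user_input):
    """Return the patterns that match user_input (empty list if none)."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(user_input)]
```

A caller might log or block any prompt for which `screen_prompt` returns a non-empty list, while still relying on model-side safeguards and output filtering for everything this simple check misses.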

Traditional Threats Amplified by AI

Automated Attack Campaigns: AI enables cybercriminals to automate and scale their attacks. AI-powered cyberattacks can automatically identify vulnerabilities, customize attacks for specific targets, and execute sophisticated multi-stage campaigns.

Social Engineering: AI enhances the effectiveness of phishing and social engineering attacks by enabling hyper-personalized messages based on scraped public information from social media and corporate websites.

Credential Stuffing and Password Attacks: AI can accelerate password cracking attempts and improve the success rates of credential stuffing attacks by analyzing patterns in leaked password databases.

Emerging AI Security Risks

62% of organizations have at least one vulnerable AI package, indicating widespread exposure to known AI-related vulnerabilities. Some of the most concerning AI-related Common Vulnerabilities and Exposures (CVEs) enable remote code execution, allowing attackers to take complete control of AI systems.

Large Language Model (LLM) Attacks: Half of organizations report their large language models have been targeted. These attacks can involve:

- Jailbreaking attempts to bypass safety constraints

- Data extraction through clever prompt engineering

- Model manipulation to produce biased or harmful outputs

Best Practices for Enterprise AI Security

Implementing comprehensive AI security requires a multi-layered approach that addresses the unique challenges of AI systems while maintaining operational efficiency.

Data Protection Strategies

End-to-End Encryption: All sensitive data used in AI training and inference should be encrypted both at rest and in transit. This includes training datasets, model parameters, and inference results.

Data Minimization: Collect and process only the data necessary for specific AI use cases. Avoid speculative or “just in case” data collection that increases exposure risks.

Privacy-Enhancing Technologies: Implement advanced techniques such as:

- Differential privacy to limit what results reveal about any individual record while preserving utility

- Federated learning to train models without centralizing data

- Homomorphic encryption to perform computations on encrypted data
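
Of the techniques listed above, differential privacy is the simplest to show in a few lines. The sketch below applies the Laplace mechanism to a counting query: because adding or removing one record changes a count by at most 1, noise with scale `1 / epsilon` is added to the true count. The function names and the choice of a counting query are assumptions made for this example.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=random):
    """Return a noisy count of records matching predicate.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Each call consumes privacy budget, so a production system would also need to track how many queries have been answered. That bookkeeping is omitted here.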

Access Control and Identity Management

Role-Based Access Control (RBAC): Implement granular permissions that limit access to AI resources based on job functions and the principle of least privilege.

Multi-Factor Authentication (MFA): Require strong authentication for all access to AI development and deployment environments.

Regular Access Reviews: Conduct periodic audits of user access rights and remove unused or excessive permissions. Given that 78% of organizations have unused IAM roles, this represents a critical security improvement opportunity.
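
The role-based, least-privilege model described in this section can be sketched as a mapping from roles to permissions with a deny-by-default check. The role names and permission strings below are hypothetical and exist only for this example.

```python
# Hypothetical roles and permissions for illustration.
ROLE_PERMISSIONS = {
    "ml_engineer": {"model:read", "model:deploy"},
    "data_scientist": {"model:read", "dataset:read"},
    "auditor": {"audit_log:read"},
}


def is_allowed(user_roles, permission):
    """Return True only if one of the user's roles grants the permission.

    Unknown roles and unknown permissions are denied by default.
    """
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in user_roles
    )
```

Keeping the mapping small and explicit also makes periodic access reviews easier, since every granted permission is visible in one place.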

Secure Development Practices

AI Model Security Testing: Conduct regular security assessments including:

- Adversarial testing to identify model vulnerabilities

- Data leakage testing to ensure models don’t expose training data

- Robustness testing under various attack scenarios

Secure Model Storage: Protect AI models using encryption and secure storage solutions. Implement version control and audit trails for all model changes.

Supply Chain Security: Verify the integrity and security of all AI libraries, frameworks, and third-party components used in development.
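
A basic building block for both model storage and supply chain checks is verifying that an artifact matches a known-good hash before it is loaded. The sketch below uses SHA-256 from the Python standard library. It assumes the expected digest is obtained from a trusted source, such as a signed release manifest, which is not shown.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return True if the file's digest matches the expected hex digest."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A hash check confirms integrity only. It does not say anything about whether the artifact was safe in the first place, so it complements rather than replaces vulnerability scanning of AI packages.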

Monitoring and Incident Response

Real-Time Monitoring: Implement continuous monitoring of AI systems to detect anomalies, unauthorized access attempts, and potential attacks.

AI-Specific Incident Response: Develop incident response procedures tailored to AI security events, including model compromise, data poisoning, and adversarial attacks.

Performance Monitoring: Track AI model performance over time to detect signs of degradation that might indicate security compromises.
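
Performance monitoring can begin with something as simple as comparing recent accuracy against a baseline. The sketch below keeps a rolling window of labeled outcomes and flags degradation when accuracy falls more than a chosen margin below the baseline. The class name, window size, and threshold are assumptions for illustration, and the approach presumes that ground-truth labels eventually become available.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy and flag drops below a baseline."""

    def __init__(self, baseline, window=100, max_drop=0.05):
        self.baseline = baseline
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window)

    def record(self, correct):
        """Record whether one prediction turned out to be correct."""
        self.outcomes.append(1 if correct else 0)

    def degraded(self):
        """Return True if the full window's accuracy is too far below baseline."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data to judge yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline - self.max_drop
```

A drop in accuracy has many possible causes, including ordinary data drift, so an alert from a monitor like this is a prompt for investigation rather than evidence of an attack.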

Building an Effective AI Governance Framework

Successful AI governance requires a structured approach that balances innovation with security, compliance, and ethical considerations.

Establishing Governance Structure

Cross-Functional Teams: Form AI governance committees that include representatives from IT, legal, compliance, HR, and business units. This ensures all perspectives are considered in AI deployment decisions.

Executive Leadership: Assign a senior executive to champion AI governance initiatives. Senior leadership alignment is crucial for ensuring governance frameworks gain organizational traction.

Clear Roles and Responsibilities: Define specific roles such as:

- Chief AI Ethics Officer

- AI Security Architect

- Data Protection Officer

- AI Risk Manager

Policy Development and Implementation

AI Usage Policies: Develop clear guidelines governing AI tool adoption, data usage, and acceptable use cases. Only 10% of organizations have formal AI policies in place, representing a significant gap that needs immediate attention.

Risk Classification: Categorize AI use cases by risk level:

- High-risk: Credit scoring, healthcare diagnostics, hiring decisions

- Medium-risk: Customer segmentation, pricing algorithms

- Low-risk: Inventory forecasting, content suggestions
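
The tiers above can be encoded as a simple lookup so that new AI projects are classified consistently. The mapping below mirrors the examples in the list. Treating unlisted use cases as high-risk until reviewed is an assumption of this sketch, not a finding from the survey.

```python
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Use cases taken from the risk tiers listed above.
USE_CASE_RISK = {
    "credit scoring": RiskLevel.HIGH,
    "healthcare diagnostics": RiskLevel.HIGH,
    "hiring decisions": RiskLevel.HIGH,
    "customer segmentation": RiskLevel.MEDIUM,
    "pricing algorithms": RiskLevel.MEDIUM,
    "inventory forecasting": RiskLevel.LOW,
    "content suggestions": RiskLevel.LOW,
}


def classify(use_case):
    """Return the risk level for a use case.

    Unknown use cases default to HIGH so that they receive a review
    (an assumption of this sketch).
    """
    return USE_CASE_RISK.get(use_case.strip().lower(), RiskLevel.HIGH)
```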

Compliance Mapping: Align AI governance with relevant regulations such as GDPR, CCPA, HIPAA, and emerging AI-specific legislation like the EU AI Act.

Implementation Best Practices

Privacy by Design: Integrate privacy protections into AI systems from the initial design phase rather than adding them later.

Continuous Risk Assessment: Regularly evaluate AI systems for new risks and vulnerabilities as technologies and threat landscapes evolve.

Employee Training: Provide comprehensive training on AI ethics, security best practices, and governance requirements for all staff involved in AI projects.

Stakeholder Communication: Maintain transparency with customers, partners, and regulators about AI governance practices and risk mitigation efforts.

The Future of AI Security

The AI security landscape continues evolving rapidly, with new challenges and solutions emerging regularly. Organizations must prepare for future developments while addressing current vulnerabilities.

Regulatory Evolution

AI-specific regulations are becoming more common globally. The EU AI Act represents one of the most comprehensive frameworks, while other jurisdictions are developing their own approaches. Organizations must stay informed about regulatory developments and ensure their governance frameworks can adapt to new requirements.

Technology Advancement

AI Security Tools Maturation: AI-SPM and related security technologies are rapidly advancing. Organizations should evaluate emerging solutions and plan for migration from traditional security tools to AI-specific platforms.

Zero Trust for AI: The principles of Zero Trust security are being extended to AI systems, requiring continuous verification and minimal trust assumptions for all AI components and interactions.

Automated Security: AI is increasingly being used to defend against AI-powered attacks, creating an arms race between attackers and defenders using similar technologies.

Organizational Preparedness

Skills Development: Investing in AI security training and hiring specialists will become increasingly critical as the skills gap widens.

Culture Change: Organizations must foster a culture that values both innovation and security, ensuring AI adoption doesn’t compromise organizational safety.

Collaborative Defense: Industry collaboration on AI security threats and best practices will become essential for staying ahead of evolving risks.


Q & A Section

What percentage of organizations are currently using AI services?

According to the survey, 87% of organizations are actively using AI services, primarily through platforms like OpenAI, Amazon Bedrock, and Google Vertex AI.

What is the biggest challenge organizations face with AI security?

The lack of AI security expertise is the top challenge, cited by 31% of security leaders. This skills gap creates significant risks as teams are asked to protect systems they may not fully understand.

What is Shadow AI and why is it dangerous?

Shadow AI refers to unauthorized AI tool usage by employees without IT oversight. It’s dangerous because it creates security blind spots and potential data exposure risks; 25% of organizations don’t know which AI services are running in their environments.

How many organizations use AI-specific security tools?

Only 13% of organizations have adopted AI-specific security posture management (AI-SPM) tools, with most still relying on traditional security measures not designed for AI workloads.

What are the main AI-specific security threats?

Key threats include data poisoning, adversarial attacks, model extraction, prompt injection, and AI-powered cyberattacks. 62% of organizations have at least one vulnerable AI package.

How can organizations improve their AI security posture?

Organizations should implement AI-SPM tools, develop formal AI governance frameworks, provide security training, establish clear policies, apply encryption and access controls, and use privacy-enhancing technologies such as differential privacy and federated learning.

What role does cloud complexity play in AI security challenges?

With 45% using hybrid cloud and 33% using multi-cloud environments, organizations face increased complexity in maintaining consistent security policies and monitoring across distributed AI workloads.

What is AI Security Posture Management (AI-SPM)?

AI-SPM is a specialized approach that provides continuous monitoring, risk assessment, and security management specifically designed for AI systems throughout their lifecycle, addressing unique AI-specific vulnerabilities.