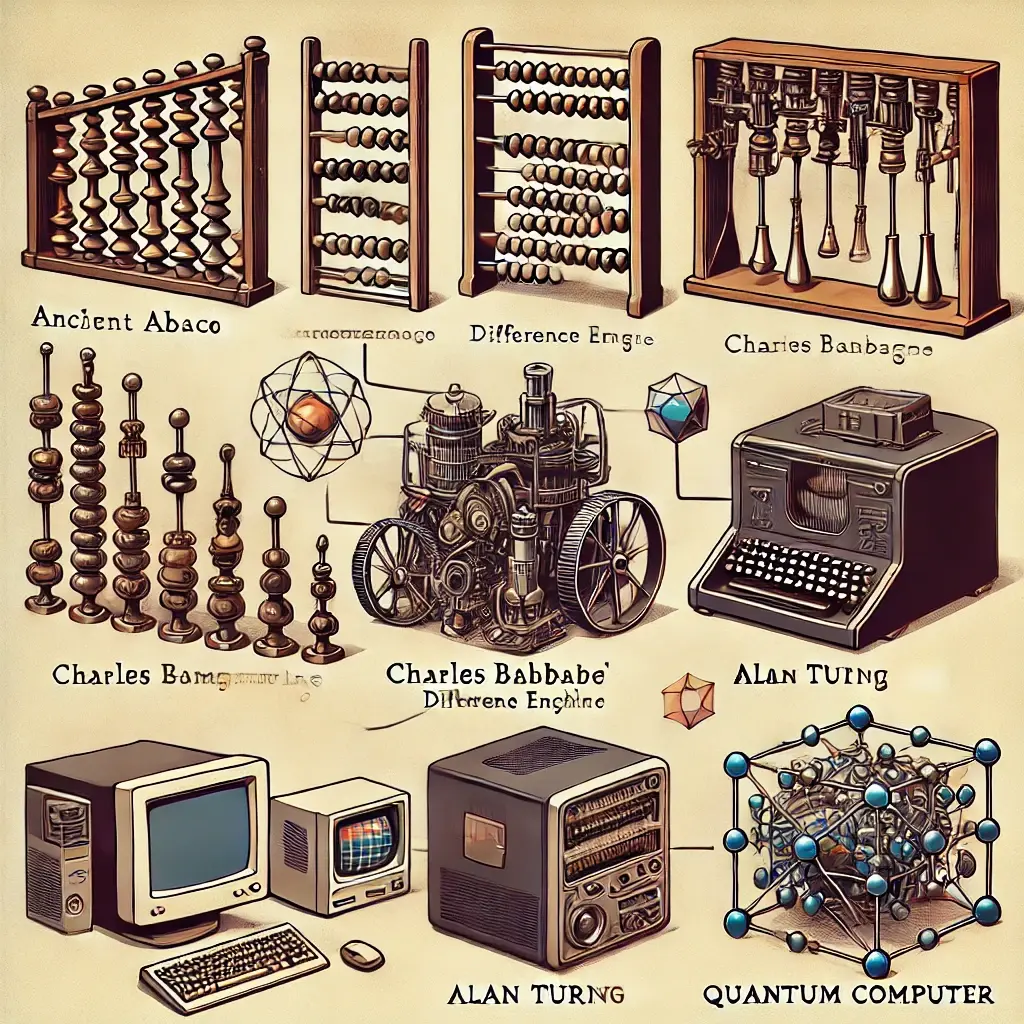

History of computers

Computers change rapidly. When I was a child I didn’t have a mobile phone, but today almost everyone has a mobile phone and a laptop. In the 1800s the world didn’t have electronic digital computers; instead, many early machines were analog, meaning they worked on continuous data. Computing has moved from analog to digital, and is now heading toward quantum. So today, in 2024, what kind of computer do you think you have? Send your answer to contact@widelamp.com; we will be happy to see your answers.

Charles Babbage and the “Analytical Engine”

Let’s start by focusing on the history of computers. In the 1800s, English mathematician Charles Babbage proposed one of the most famous early concepts for a computing machine. He designed the Analytical Engine in the 1830s, and it is often called the first general-purpose computer.

Babbage’s idea was groundbreaking because the machine could, in theory, be programmed to do different tasks. That was a huge leap forward from basic calculators.

Features of the analytical engine

Input and Output: Information could be fed into the machine and results could come out.

Memory: The machine could store numbers and use them later.

Processing Unit: It could perform calculations automatically.

The Digital Revolution: Alan Turing and the Birth of Modern Computing

The transition from analog to digital began in the early 20th century, leading to the computers we use today. One of the key figures in this transformation was Alan Turing, a British mathematician and logician.

Alan Mathison Turing and the Turing Machine

In 1936, Alan Turing introduced the Turing Machine, a theoretical model that can carry out any computation that can be described as an algorithm. Today’s computers still follow the same basic model. While it was not a physical machine, it helped define what a computer could do and how it processes information. The Turing Machine works on these principles:

Processing Instructions: It reads a tape symbol by symbol and looks up the instruction that applies to each one.

Execution of Commands: The machine followed commands to manipulate data.

Storage of Data: It could store information on the tape and use it later.

Turing’s ideas laid the foundation for modern computer science, especially the concept of algorithms—step-by-step instructions for solving problems.
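
The three principles above can be sketched in a few lines of Python. This is only an illustrative toy, not Turing's original notation: the function name `run_turing_machine`, the rule table `INCREMENT_RULES`, the state names, and the `_` blank symbol are all assumptions made for this example. The sample machine adds 1 to a binary number written on the tape.

```python
BLANK = "_"  # assumed symbol for an empty tape cell


def run_turing_machine(rules, tape, state="start", halt_state="halt", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape as a string."""
    # Storage of data: the tape is a dict from position to symbol,
    # so it can grow in either direction.
    cells = dict(enumerate(tape))
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        # Processing instructions: read the symbol under the head.
        symbol = cells.get(head, BLANK)
        # Execution of commands: each rule maps (state, symbol read)
        # to (symbol to write, head move, next state).
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


# Hypothetical rule table: walk right to the end of the number,
# then walk left turning 1s into 0s until a 0 (or blank) absorbs the carry.
INCREMENT_RULES = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", BLANK): ("1", "L", "halt"),
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # 1011 is 11; prints 1100, which is 12
```

Changing only the rule table gives a different machine, which is the sense in which one simple model can describe many different computations.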

The Enigma and World War II

During World War II, Turing’s skills became even more important. He worked at Bletchley Park, the British codebreaking center, and helped crack the Enigma code, which the Germans used to send secret messages. Turing helped design the machine used for this task, an electromechanical device called the Bombe. It was a major step in the development of computing because it could test possible Enigma settings far faster than people could.

The Digital Age: From Early Computers to Modern Devices

By the 1940s, the first fully electronic digital computers were being built. These machines used electrical signals to process information. Unlike analog computers, digital computers work with discrete values (in most designs, the binary digits 0 and 1), which makes them faster, more reliable, and capable of more complex tasks.
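
To make "discrete values (0s and 1s)" concrete, here is a small Python sketch of how a number or a letter can be written as a fixed-width string of bits. The helper names `to_bits` and `from_bits` and the 8-bit width are assumptions chosen for illustration; real machines use many different widths and encodings.

```python
def to_bits(value, width=8):
    """Return a non-negative integer as a string of 0s and 1s, padded to `width` digits."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    return format(value, f"0{width}b")


def from_bits(bits):
    """Convert a string of 0s and 1s back into an integer."""
    return int(bits, 2)


print(to_bits(5))         # 00000101
print(to_bits(ord("A")))  # 01000001 (the letter A is stored as the number 65)
print(from_bits("00000101"))  # 5
```

Because every value is one exact pattern of bits, it can be copied and stored without the small drifts that affect continuous analog signals.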

Key Milestones in Digital Computing

- ENIAC (1945): One of the first fully digital computers, ENIAC (Electronic Numerical Integrator and Computer) was huge and filled an entire room. It could calculate faster than any previous machine and marked the beginning of modern computing.

- Transistors (1947): The transistor replaced bulky vacuum tubes and made computers smaller, faster, and more efficient. This breakthrough eventually led, through integrated circuits and microprocessors, to the personal computers of the 1970s and 1980s.

- The Internet (1960s-1990s): Growing out of ARPANET, a research network funded by the U.S. Department of Defense, the Internet transformed how computers communicate, allowing people to share data almost instantly. By the 1990s, it had become a major part of everyday life.

The Future: Quantum Computers

Now we’re entering a new era of computing: quantum computing. Unlike classical computers, which use bits (each either 0 or 1), quantum computers use qubits, which can exist in a combination (superposition) of 0 and 1 at the same time. For certain kinds of problems, this lets a quantum computer explore many possibilities at once.

While quantum computers are still in the experimental stage, they have the potential to solve problems that even today’s fastest digital computers can’t handle, like simulating complex chemical reactions or improving artificial intelligence.
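
The idea of a qubit being "0 and 1 at the same time" can be made a little more concrete with ordinary arithmetic. A single qubit is described by two numbers called amplitudes, one for 0 and one for 1, and the chance of reading each value is the squared size of its amplitude. The Python sketch below only illustrates that rule on a classical computer; the function name `measurement_probabilities` is an assumption for this example, and nothing here runs on quantum hardware.

```python
import math


def measurement_probabilities(alpha, beta):
    """Return (chance of reading 0, chance of reading 1) for one qubit.

    alpha and beta are the amplitudes for 0 and 1; they may be complex numbers.
    """
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    # Rescale so that the two probabilities always add up to 1.
    alpha, beta = alpha / norm, beta / norm
    return abs(alpha) ** 2, abs(beta) ** 2


# An equal mix of 0 and 1: each outcome is about equally likely.
print(measurement_probabilities(1, 1))

# A qubit that is definitely 0 behaves like an ordinary bit.
print(measurement_probabilities(1, 0))
```

Reading a qubit still gives a single 0 or 1, which is one reason quantum computers help only with problems where a clever algorithm can take advantage of the combined state.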

How to Use This Knowledge

Understanding the evolution of computers helps us appreciate the devices we use every day. Computers have grown from Babbage’s mechanical designs to powerful digital systems, and now, they’re moving toward quantum computing. As technology advances, knowing where it came from can give us insight into where it might go in the future.

Q & A – Section

Here are some common questions, with answers that should clear up any remaining doubts.

What is the difference between analog and digital computers?

Analog computers work with continuous data, such as physical quantities (like temperature or speed). Digital computers, on the other hand, work with discrete values, using binary code (0s and 1s) to process information. Digital computers are faster, more reliable, and capable of more complex tasks.

Who was Charles Babbage, and what did he invent?

Charles Babbage was an English mathematician who designed the Analytical Engine in the 1830s. It is considered the first general-purpose computer. Though it was never built in his lifetime, the Analytical Engine had key features of modern computers, like input/output, memory, and the ability to perform automatic calculations.

Why is Charles Babbage’s Analytical Engine considered a major milestone in computing history?

The Analytical Engine is considered a major milestone because it was the first design for a machine that could be programmed to do different tasks. This was a huge leap from simple calculators, and it is why the Analytical Engine is regarded as the first general-purpose computer design.

What is the Turing Machine, and why is it important?

The Turing Machine, introduced by Alan Turing in 1936, is a theoretical model that can carry out any computation that can be described as an algorithm. It laid the foundation for modern computer science and helped define what computers can and cannot do, especially through the idea of algorithms that read, process, and store information step by step.

How did Alan Turing contribute to the Allied effort during World War II?

During World War II, Alan Turing worked at Bletchley Park to break the German Enigma code, which the Germans used to send secret messages. He helped design a machine called the Bombe that could quickly test possible Enigma settings, significantly helping the Allied forces.

What was ENIAC, and why is it significant?

ENIAC (Electronic Numerical Integrator and Computer) was one of the first fully digital computers, built in 1945. It was huge and filled an entire room. ENIAC could calculate much faster than any previous machine and is often considered the start of the modern computing era.

What invention replaced vacuum tubes in computers, making them smaller and faster?

The invention of the transistor in 1947 replaced bulky vacuum tubes. Transistors made computers smaller, faster, more efficient, and cheaper, paving the way for personal computers.

How did the Internet revolutionize computers and communication?

The Internet, initially developed for military use, transformed how computers communicate. It allowed computers to share information almost instantly across the globe. By the 1990s, the Internet had become a major part of everyday life, revolutionizing communication, business, and entertainment.

What makes quantum computers different from digital computers?

Unlike digital computers, which use bits (each either 0 or 1), quantum computers use qubits, which can be in a combination of 0 and 1 simultaneously. This lets quantum computers explore many possibilities at once, which could make them very powerful for certain types of complex problems.

Why is understanding the history of computers important for the future?

Understanding the history of computers helps us appreciate the devices we use today and provides insight into how technology evolves. From mechanical designs like Babbage’s Analytical Engine to today’s digital and quantum computers, each advancement builds on the last, showing us where future innovations might lead.